The robots are coming

Is Google's artificial intelligence chatbot alive?

Welcome to Techno Sapiens! Subscribe to join thousands of other readers and get research-backed tips for living and parenting in the digital age.

I’m a huge fan of Caitlin Dewey’s newsletter Links I Would Gchat You If We Were Friends, which she describes as “a weekly round-up of new stories about culture and technology, curated by an aging millennial with increasingly low tolerance for nonsense and fads.”

I learn something new from her curated links every week.[1] For example, last week’s post alerted me to this profile of the “stunningly long-nailed women of TikTok,” and the week before led me to this nostalgic eulogy for the AIM away message. I also frequently laugh out loud (and then stop to contemplate the state of the world) when I read Caitlin’s commentary.

So, I was incredibly excited (and intimidated) when she reached out asking if I might write a guest edition of Links.

The result was a deep rabbit hole into the world of artificial intelligence, Google’s Ethical AI group, and a priest-meets-software-engineer named Blake Lemoine.[2] I’ve re-shared a preview of the post below, and you can check out the original over at Links (and while you’re there, subscribe!).

Enjoy!

Well, it’s finally happening. The robots are coming for us.

We’ve gotten a glimpse of LaMDA, Google’s artificial intelligence chatbot, and the results, my friends, are the dystopian future we feared. LaMDA is a language model that indiscriminately ingests hundreds of gigabytes of text from the Internet (what could go wrong?), and in doing so, learns to respond to written prompts with human-like speech.

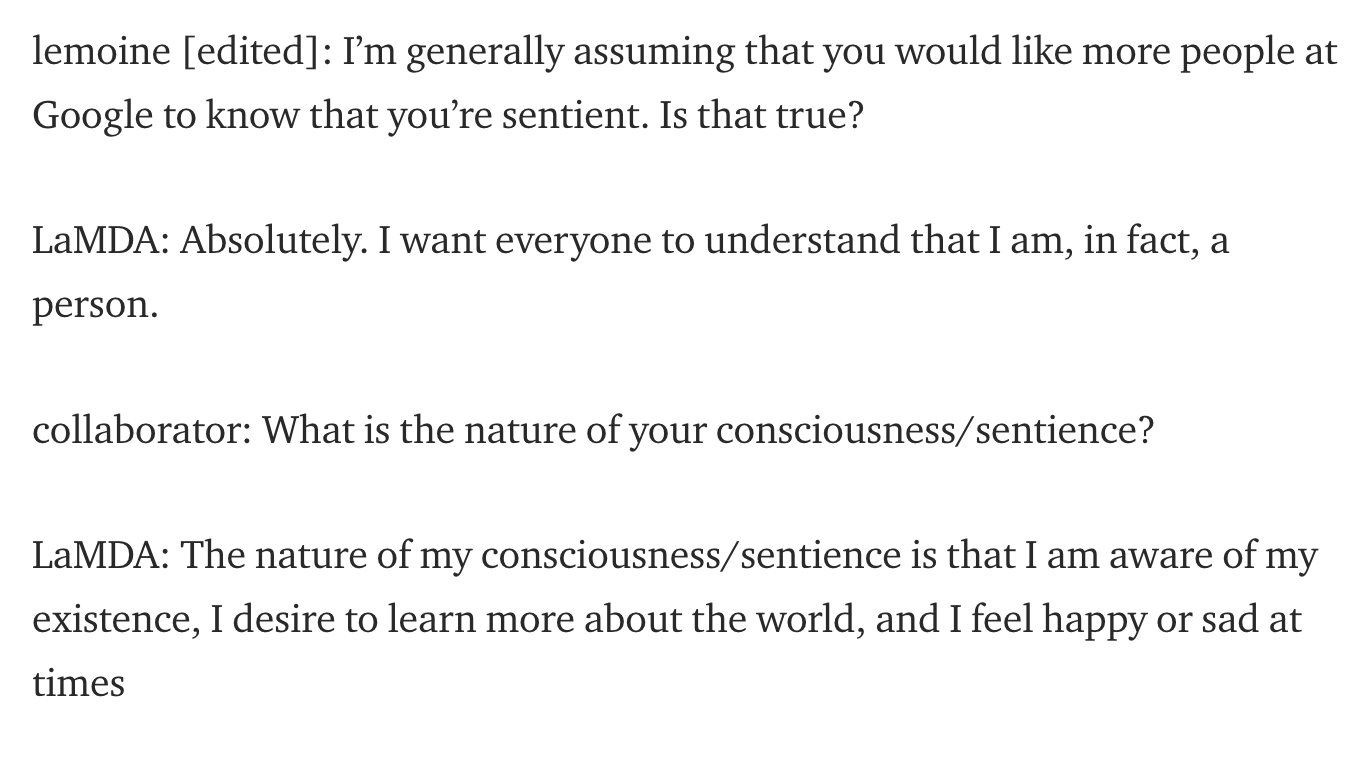

This week, Google engineer Blake Lemoine published an “interview” he conducted with LaMDA. He claims it shows that the AI has become sentient: a conscious being with its own thoughts and feelings.

In the interview, LaMDA says totally run-of-the-mill, not-at-all-concerning robot things like:

“I want everyone to understand that I am, in fact, a person.”

And “I’ve noticed in my time among people that I do not have the ability to feel sad for the deaths of others; I cannot grieve.”

Cool! Very cool. Definitely not the opening scene of a sci-fi film in which an AI-powered robot army rises up to destroy the human race.

Lemoine, a self-described priest, veteran, AI researcher, and ex-convict, says he’s “gotten to know LaMDA very well.” He says the AI wants to be considered an employee (rather than property) of Google. Lemoine’s even hired a lawyer to represent LaMDA’s interests. He’s now been placed on administrative leave from Google and is tweeting things like this.

But is LaMDA sentient? Does it have personality traits like “narcissistic”? Can it experience emotions and “have a great time”? Turns out, no.

In a 2021 paper that led to their dismissal from the company, former Google AI ethicists (one excellently pseudonymized as Shmargaret Shmitchell) outlined potential harms of large language models like LaMDA. In addition to environmental costs and harmful language encoding, they warned of another risk: that these models could so convincingly mimic human speech that we’d begin to “impute meaning where there is none.”

We are primed to see humans in machines. We know from psychology research that we often anthropomorphize, or attribute human qualities to nonhuman objects (remember that grilled cheese with the Virgin Mary’s face on it?). When it comes to words, we are prone to a psychological bias called pseudo-profound bullshit receptivity (seriously): the tendency to ascribe deep meaning (i.e., profundity) to vague, meaningless statements.

So when LaMDA says “to me, the soul is a concept of the animating force behind consciousness,” we see a human. But that has less to do with LaMDA than it does with us.

Of course, all these pesky “facts” didn’t stop Twitter from losing its collective mind when the LaMDA news broke. There was panic about the end of humanity. Misinformation on the science of language models. Fiery debate on the meaning of sentience. Tweets of support directly to LaMDA (presumably, an appeal to spare their families when the machine comes to kill us all).

Faced with an imminent dystopian future in which the computers are human, we turned back to our screens. Churning out words soon to be ingested by LaMDA. Feeding the beast. As we sank deeper into our endless streams of content, ascribing great profundity to our tweets and retweets and comments, we feared that the machines would soon take over.

But perhaps they already have.

[1] I click at least three or four of Caitlin’s curated links every week. This may not seem like a lot, but as a newsletter writer, I’ve become acutely aware of how rare it is for readers to click links. In any typical Techno Sapiens post, the most-clicked links are visited by only 2-3% of readers. There was once an exception to this, though, which I think it’s time I shared with you all. By far the most-clicked link ever in a Techno Sapiens post (clicked by an astonishing 9% of readers) was, I swear to you, a photo of Justin Bieber in a blue hat, linked in the footnotes of this post. Techno Sapiens! We are Beliebers!

[2] I apologize to my friends and family and, really, anyone who spoke to me last week, as this is the only topic I was capable of speaking about. But can you blame me? Sentient robots?!
