New data on teens and AI

Let's get a vibe check

Welcome to Techno Sapiens! I’m Jacqueline Nesi, a psychologist and professor at Brown University, co-founder of Tech Without Stress, and mom of two young kids. If you like Techno Sapiens, please consider sharing it with a friend today. Thanks for your support!


A few weeks ago, I came home to find my husband sitting at the kitchen counter with our sons, his laptop open before them. My husband’s fingers were poised on the keyboard, and our kids were looking on, eyes glued to the screen.

“Now do a pirate with a peg leg, shaving with a rusty razor!!!” shouted our three-year-old.

“Okay,” my husband said, typing the words as he spoke them. “Create an image of a pirate with a peg leg, sitting on a pirate ship, shaving with a straight razor. Make sure there is rust on the razor.”

It was then they seemed to notice my arrival.

Our three-year-old looked up at me, face lit up with sheer delight. “We’re making pirates!!!”

My husband added, “We’re using ChatGPT. And we’re, uh, really focused on the rusty razor thing…”

A second later, a collective cheer rang out as the image appeared on the screen.

“Okay,” our three-year-old said, “now do a pirate with a BEARD shaving with a rusty razor!!!”

Our children are young, so their views of generative AI are pretty much entirely encapsulated by this scene (i.e., enthusiasm for depicting the lyrics of “What Shall We Do with a Drunken Sailor?”).[1]

For teens, however, things are more nuanced.

When it comes to generative AI, the landscape is changing fast, and last month, we got two new reports worth digging into. Common Sense Media released Teens, Trust, and Technology in the Age of AI: Navigating Trust in Online Content (juicy!), and Pew Research Center shared new data on teens’ use and views of ChatGPT.

So, it’s time for a vibe check.[2] What do teens think of generative AI? Are they using it? Do they trust it? How do they feel about the companies that build and employ this technology?

Let’s take a look.

Do teens know what ChatGPT is?

They do! Though many are not using it regularly.

79% of teens have heard of ChatGPT (as of fall 2024). This is up from 67% one year ago.

Data from last summer suggests that 51% of teens have ever used generative AI.

The number of teens who have used ChatGPT for schoolwork has doubled in the past year. 26% say they’ve used it for schoolwork (vs. just 13% one year ago).

For comparison, 23% of U.S. adults say they’ve ever used ChatGPT (as of spring 2024). Among young adults (ages 18-29), the number is higher (43%).

How do they feel about using AI for schoolwork?

Teens who have used generative AI for schoolwork are roughly evenly split on whether the output was accurate: 39% say they’ve found a problem or inaccuracy in it, and 36% say they have not.

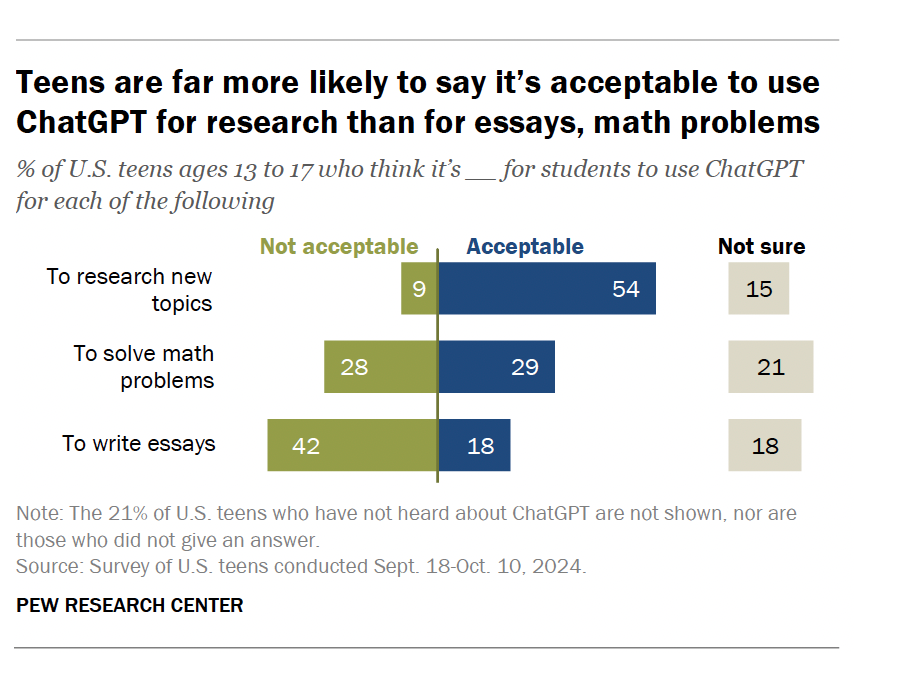

Teens’ views on when it’s acceptable to use ChatGPT vary by task: 54% say it’s acceptable to use it to research new topics, but only 18% say the same for writing essays.

Do they trust AI (and the companies that use it)?

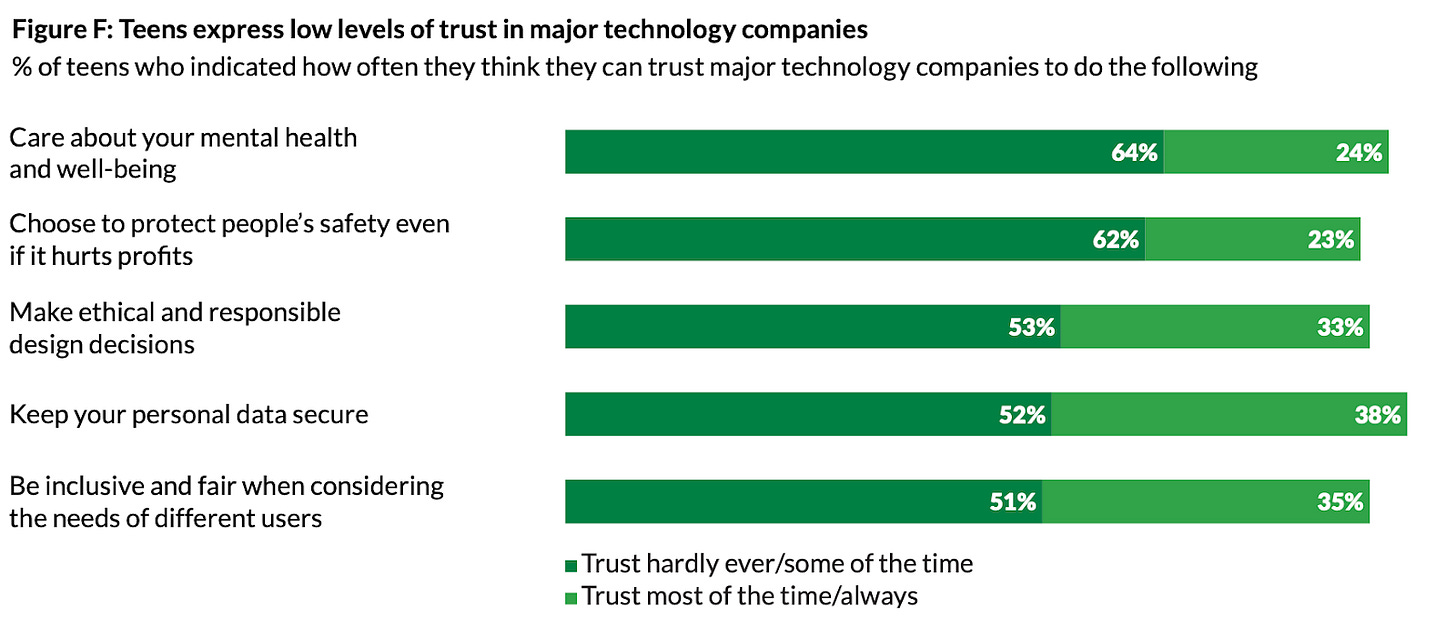

Teens have mixed feelings about whether they can trust AI and the companies that use it in their products.

35% of teens say that AI will make it harder to trust the accuracy of information they see online, but 22% say AI will make it easier and 24% say it will not change.

Similarly, teens are split on how much they feel they can trust tech companies to make responsible decisions about AI. 39% say they can trust companies “some” or “a great deal,” but 47% say “a little” or “not at all.”

Overall, teens’ trust in tech companies is somewhat low, especially when it comes to trusting that companies care about their mental health and well-being, or that companies will protect people’s safety even at the expense of profits.

What do they think about AI safeguards?

Common Sense Media gave teens a list of proposals for “managing AI” and asked how important (if at all) each one was to them.

Most teens were in favor of these safeguards. For example:

74% said generative AI should have visible warnings that its outputs could be harmful, biased, or wrong

73% said AI-generated content should be labeled or watermarked to indicate that it was created by AI

61% said that companies that build generative AI should compensate creators of the content they use to train their software

Of note, according to Pew, 54% of U.S. adults say that generative AI programs should credit the sources they used to generate responses.

AI-AI, Captain

This landscape is changing quickly.[3] The large majority of teens (79%) now say they’ve heard of ChatGPT, and compared with just one year ago, the number who say they’ve used it for schoolwork has doubled (26% vs. 13%). Many teens seem a bit skeptical of AI and the companies that use it: most say they do not trust Big Tech companies to prioritize their users’ well-being, and most support AI safeguards.

One thing that strikes me in this data, though, is the high percentage of teens reporting “not sure” in response to some of these questions. I don’t know what you were like as a teen, but I, personally, do not remember “I don’t know” being a strong part of my vocabulary.[4] This suggests to me that teens really aren’t sure how to navigate this technology. For example:

21% say they’re “not sure” if it’s acceptable to use ChatGPT to solve school math problems

25% say they’re “not sure” if there’s been a problem or inaccuracy in a generative AI output they’ve gotten for schoolwork

21% say they’re “not sure” if they’ve seen images or videos that were real but misleading

To me, this points to the need to teach kids skills around new tech: whether and when it’s appropriate to use it, how to spot misleading or inaccurate information, and how to critically evaluate what they’re seeing online. Maybe the challenge is that we adults need to learn these skills first.


[1] I know, I know. Regular readers are getting a little sick of the pirate and sea shanty references, but what can I tell you? Art imitates life? And life with a three-year-old who is passionate about his very niche interests marches on.

[2] Look at us, techno sapiens! We are hip. We are trendy. We are using phrases like “vibe check,” and only Googling it a few times beforehand to clarify the definition, and still remaining only slightly unsure that we’re using it correctly! [Did I do it right? Young people, please advise.]

[3] Okay, yes, “AI-AI, Captain” was a stretch, but you know I cannot resist a pun. Other options were “Mother, m-AI?” and “You don’t s-AI,” so I think we landed in an okay spot.

[4] Note that a lack of willingness to say “I don’t know” is not limited to teens. Anyone who has ever interacted with other humans or tuned into the news knows that this occurs in adults, too. In fact, there’s a whole body of research on the concept of intellectual humility (i.e., recognizing the limits of one’s own knowledge). The research generally suggests that we (as individuals and as a society) are better off when we have higher levels of intellectual humility, so, as this helpful article states, “…next time you feel certain about something, you might stop and ask yourself: Could I be wrong?” Note that I’m very confident I’m right about this.
