10 amazing things AI can do now

Plus, the Techno Sapiens theme song you didn't know you needed

Hi! I’m Jacqueline Nesi, a psychologist and professor at Brown University, co-founder of Tech Without Stress, and mom of two young kids. Thanks for being part of Techno Sapiens. We’re now a community of over 20,000 smart, fun, and curious readers. If you like it here, please consider sharing with a friend!

PS: Are you interested in sponsoring a future edition of Techno Sapiens? Respond to this email or reach out to technosapiens.substack@gmail.com.



It was August 2012, and I was getting ready to move to North Carolina for graduate school. I talked to someone who’d once lived in Chapel Hill, and after the requisite Triangle-focused small talk (Yes, can’t wait! Yeah, so much fun. A bar called He’s Not Here? I’ll have to check it out. No, not a basketball fan yet, but I will be soon!), they mentioned the weather. Hope you’re ready for those summers! I thought this was odd. I mean, of course I was ready for the summers. Sure, I’d spent my entire life in the Northeast, but I’d been in hot, humid weather before. And yes, I knew the average summer highs were pushing 90 degrees, with around 80% humidity,1 but how bad could it be?

A couple weeks later, I remember opening my new apartment door to a feeling I’d only ever experienced when opening an oven—a sudden blast of heat to the face, the air as thick as syrup, sweat immediately seeping from every pore on my body—and thinking…oh, I get it now.2

There’s a big difference between knowing something in theory and experiencing it directly.

And this relates to AI, how…?

It’s been just over a year since ChatGPT launched to the public. Since then, AI has evolved incredibly fast. We’ve seen major technical developments, including faster processing and larger context windows (i.e., the amount of information a model can take in at once).

You, like me, may have heard about some of the novel, terrifying, and/or awe-inspiring things these models can now do. Or maybe you’ve heard rumblings of this whole [air quotes] “AI thing,” but haven’t quite gotten around to checking it out. Well, it’s one thing to know about these things in theory, and entirely another to experience them directly.

10 amazing things AI can do now

With that in mind, this week we’re testing out some new AI tools, focusing on those I’ve found to be particularly surprising or useful.

Here are 10 things AI can do now:

1. Write a song

Given a single written prompt specifying topic and musical style, AI can write a full song, including tune and lyrics. I asked Suno to compose and sing an upbeat Techno Sapiens theme song (obviously), specifying that the newsletter was about psychology, technology, and parenting.3 I thus present to you:

Not bad! We are all about growin’, learnin’, and understandin’!

2. Create images

AI can create photos, too! Write a prompt describing the image you would like, and tools like DALL-E and Midjourney will generate it from scratch. I took to DALL-E to settle an age-old Techno Sapiens debate: would you rather fight one horse-sized duck or 100 duck-sized horses? I asked it to create an image of said duck and horses, and voilà!

After seeing this visual, I can confirm I am on team duck-sized horses. (That enormous duck is frightening.)

3. Animate images

Start with a photo, and AI tools like Runway and HeyGen will turn it into video. Depending on the tool, this can happen based on your specific instructions for animation, or on its own. I used Runway, uploaded my headshot, and asked it to make my head turn to the side (and to animate the traffic in the background).

Here’s what it did:

Looks nothing like me, but very cool (and unsettling!) all the same.4

4. Create videos

If you don’t want to start with a photo, there’s also text-to-video. Simply write a prompt, and a few minutes later, a high-quality video is ready to go. OpenAI, the creator of ChatGPT, recently announced a tool called Sora for this (Runway, HeyGen, and Pika work, too). Sora is not ready for use yet, but a few samples are available on the website. For example, this is the result of the prompt: A corgi vlogging itself in tropical Maui.5

5. Replace translators (and subtitles)

Here’s a wild application of this type of AI video generation. In January, Argentinian President Javier Milei gave a speech to the World Economic Forum in Spanish. Here’s a video of that same speech, happening in English. Milei used HeyGen to translate the speech, and then animate his face, so it looks like he is speaking English (with his own accent).

Of all the tools I tested, I found these video generation tools to be the most impressive. Of course, it’s also easy to see how tools like this could be used for more nefarious purposes. My hope is that the same advancements that led to these tools can also help us keep the risks in check.

6. Transform meetings

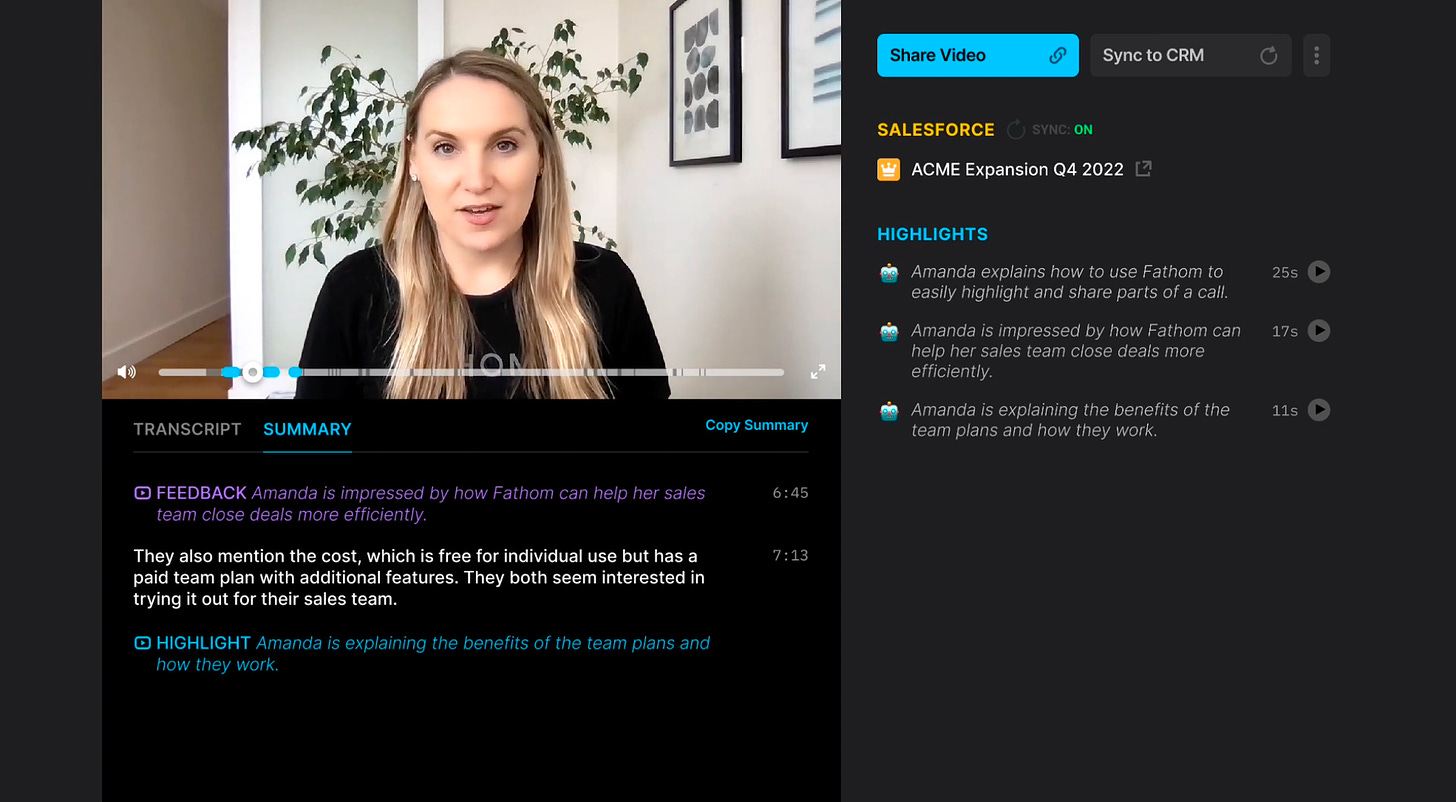

Gone are the days of note-taking, manual transcription, and asking didn’t we talk about that last week? Tools like Fireflies, Otter, and Fathom will record your (virtual) meetings, transcribe the conversation, summarize takeaways, and even allow you to search through your meeting archives for highlights. Here’s what this might look like in Fathom, for example:

7. Create presentations

Need a PowerPoint for an upcoming presentation? Tools like Gamma, Tome, and Pitch will take a written prompt and turn it into a full slide deck. To test this one, I pasted this Techno Sapiens post (One Simple Parenting Trick) into the Gamma dialog box. It created this:

Where was this when I was in grad school?!

8. Turn your reading into audio

Need to quickly catch up on some reading? Tools like Recast will turn a written article into a short audio summary. For example, here is the two-minute audio summary of this Techno Sapiens post (A mindful approach to your phone).

9. Create social media content

We’re already seeing plenty of AI-generated social media content (what could possibly go wrong?), and tools like Piggy and Planoly show how easy this can be. I used Piggy, offering a simple prompt: “screen time for kids.” The result was this seven-slide story (perfectly sized for Instagram).

10. Analyze emotions in video and voice

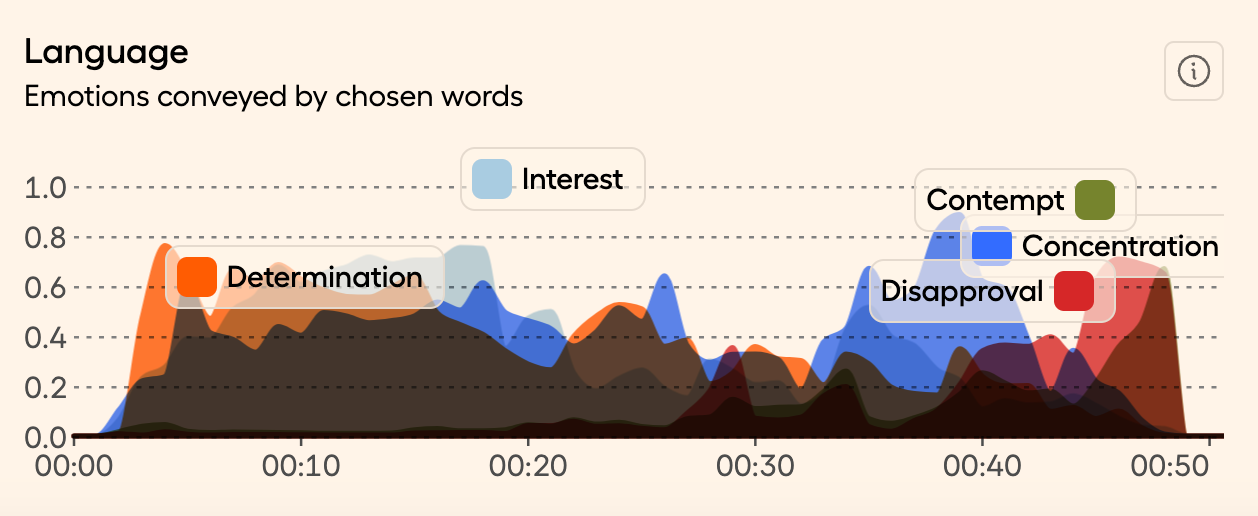

For many years, the ‘gold standard’ system for analyzing facial expression in psychology has been something called the Facial Affect Coding System. It involves researchers watching video of individuals’ facial expressions and noting every muscle movement—from “lip corner depression” to “upper eyelid raise.” It takes people 50 to 100 hours to learn how to do it.

Now, AI can do this in seconds. Given a video, Hume can identify the emotions expressed in both facial expressions and voice. Here I am, testing it on my own face/voice (with a video I recorded for @techwithoustress):

Though I now feel bad for the researchers who spent months of their lives learning the original Facial Action Coding System (100 hours!!!),6 this feels like a major step forward for the field.

The future is now

Generative AI is quickly transforming our lives. Of course, there are risks, and one of the major challenges of the next few years (and beyond) will be figuring out how to use it responsibly. At the same time, I think it’s worth taking a step back to appreciate just how powerful this technology can be and how quickly it’s evolved. We’re living in the future, sapiens!

A good first step? Try it out for yourself. Maybe with some background music (...may I suggest our new anthem?).

Hey, come on now, join the conversation (come on, join the conversation). Techno Sapiens, we're your information station (information station)!


It’s a defining moment when you realize that, after the sun goes down, it’s still not going to cool down. This took me years to internalize. Having never lived in a climate as humid as North Carolina’s, I’d spend every day of the summer thinking we’d reach a moment when the real-feel would finally dip below 95 degrees, and that moment never came.

I should clarify that I *loved* living in Carrboro, North Carolina (right next to Chapel Hill, for those unfamiliar). I loved the people, the energy, the walking trails, even the weather. And the food. I dream about the biscuits and grits at the Carolina Inn Sunday brunch buffet, the bacon at Root Cellar, the coffee from Open Eye... (I also got a PhD while living there. I don’t dream about that.)

Does the photo animation remind anyone else of the magical portraits in Harry Potter? Is that just my millennial showing?

The prompt for this text-to-video provided by Sora is: A corgi vlogging itself in tropical Maui. I love how the video turned out, but I can’t help but wonder if this is grammatically correct. Does one “vlog oneself”? I assumed it was just “vlog”? “Make a vlog”? Never mind. I’m going to go newsletter myself.

If you are a person who spent 100 hours learning the Facial Action Coding System... are you okay? Please get in touch. We are thinking of you during this difficult time.
